Posts Tagged: Parametric Design

Responsive Environment with Grasshopper and the Magic Leap

In a previous project, I created an Augmented Reality environment that uses Firebase as the backend to send information back and forth between the computer, where the geometry is created, and the phone (a Pixel 1), where that geometry is populated and rendered. This time around, I decided to build similar logic using the Magic Leap.

It works as follows:

– The Unity Editor pushes the positions of the camera and the hands to the Firebase Database.

– Grasshopper, a visual programming tool, reads those positions. The large sphere represents the head; the smaller spheres represent the hands.

– I used those positions as the input for a responsive environment. A change in those positions triggers a change in the parametric design, which then gets pushed to Firebase. In this case, head movement changes the color of the pieces, and hand movement changes their relative positions.

– The Unity Editor then reads those changes and updates the geometry accordingly. One of the main issues I found is that the database did not update in real time: some changes took several seconds to be read and reflected visually.
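The loop above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration rather than the project's code: a plain dictionary stands in for the Firebase database, the `tracking` and `design` keys are made up, and the rule that maps head movement to color (the hue sweeps from red towards blue as the head moves away from a reference point) is an assumption chosen only to show a tracked position driving a parametric change.

```python
import colorsys
import math

# Hypothetical stand-in for the Firebase database: a nested dict.
# The real setup used Firebase; these keys are assumptions for illustration.
database = {
    "tracking": {  # written by the Unity Editor
        "head": (0.0, 1.6, 0.0),
        "left_hand": (-0.3, 1.2, 0.4),
        "right_hand": (0.3, 1.2, 0.4),
    },
    "design": {},  # written by the parametric model, read back by Unity
}


def head_to_rgb(head, center=(0.0, 1.6, 0.0), radius=2.0):
    """Map the head's horizontal distance from `center` to an RGB color.

    Distance 0 gives red; distance >= `radius` (clamped) gives blue.
    """
    dx = head[0] - center[0]
    dz = head[2] - center[2]
    t = min(math.hypot(dx, dz) / radius, 1.0)
    # colorsys uses hues in 0..1; 2/3 is blue on the HSV wheel.
    return colorsys.hsv_to_rgb(t * 2.0 / 3.0, 1.0, 1.0)


def grasshopper_step(db):
    """One pass of the responsive environment: read tracking, write design."""
    head = db["tracking"]["head"]
    db["design"]["color"] = head_to_rgb(head)
    return db["design"]
```

Each recompute reads the latest tracking data and writes a new color for the Unity side to pick up; in the actual setup both sides would read from and write to Firebase instead of sharing a dictionary.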

Unfortunately, the Firebase libraries seemed to be incompatible with the Magic Leap. To show a proof of concept, I baked one of the versions of the parametric design and loaded it in Unity. I then wrote a Unity script that works similarly to the parametric design definition: it detects the distance from the user’s hands to the pieces in the design and moves the pieces accordingly.
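The proximity logic of that Unity script can be illustrated with the following sketch. The original was written for Unity; this Python version is only an approximation under stated assumptions: each piece is pushed directly away from any hand within a hypothetical `radius`, the offset falls off linearly to zero at that radius, and offsets are always measured from the piece's baked rest position.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def displace_pieces(
    rest_positions: Sequence[Vec3],
    hands: Sequence[Vec3],
    radius: float = 0.5,      # hypothetical influence radius (metres)
    max_offset: float = 0.2,  # hypothetical maximum push (metres)
) -> List[Vec3]:
    """Push each piece away from any hand closer than `radius`.

    The push grows linearly from 0 at `radius` to `max_offset` at the hand,
    and pushes from both hands are summed.
    """
    result = []
    for p in rest_positions:
        ox = oy = oz = 0.0
        for h in hands:
            d = (p[0] - h[0], p[1] - h[1], p[2] - h[2])
            dist = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2)
            if dist == 0.0 or dist >= radius:
                continue  # out of range, or push direction undefined
            strength = max_offset * (1.0 - dist / radius)
            # Unit vector from hand to piece, scaled by the falloff.
            ox += d[0] / dist * strength
            oy += d[1] / dist * strength
            oz += d[2] / dist * strength
        result.append((p[0] + ox, p[1] + oy, p[2] + oz))
    return result
```

Computing offsets from the rest positions on every frame, rather than accumulating them, means the pieces drift back to where they were once the hands move away.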

The vertex color shader used in the Unity Editor is not supported on the Magic Leap, so all the pieces keep the same color in this demo.

What jazz, parametric design and spatial computing have in common

I have written a new Medium post for the Virtual Reality Pop publication where I discuss jazz, parametric design and spatial computing. You can check it out here.

Daylight simulation as experienced in virtual reality

Geometry streaming in Augmented Reality

Every quarter at IrisVR, we stop everything to work on hack projects for a couple of days. In Q1 of this year, I decided to develop an Augmented Reality app that could stream objects, and the changes made to their geometry, in real time. ARCore, Google’s augmented reality SDK for Android, is now available for developers, and Unity has great support for it. I thought it would be fun to test it by creating an Augmented Reality environment that uses Firebase as the backend to send information back and forth between the computer, where the geometry is created in Grasshopper, and the phone (a Pixel 1), where that geometry is populated and rendered. Check it out below!
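One way to keep such a stream light is to send only what changed between two versions of the geometry. The sketch below is an assumption, not how the hack project was built: it compares two vertex lists of the same mesh, produces a JSON-friendly patch keyed by vertex index, and applies that patch on the receiving side. The function names and payload format are hypothetical.

```python
import json
from typing import Dict, List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def diff_vertices(old: Sequence[Vertex], new: Sequence[Vertex],
                  tol: float = 1e-6) -> Dict[str, List[float]]:
    """Return {index: [x, y, z]} for vertices that moved more than `tol`.

    Keys are strings because JSON object keys must be strings.
    Assumes vertex count and order are unchanged between versions.
    """
    if len(old) != len(new):
        raise ValueError("topology changed; send the full mesh instead")
    changes = {}
    for i, (a, b) in enumerate(zip(old, new)):
        if any(abs(ca - cb) > tol for ca, cb in zip(a, b)):
            changes[str(i)] = list(b)
    return changes


def apply_patch(vertices: Sequence[Vertex],
                patch: Dict[str, List[float]]) -> List[Vertex]:
    """Receiving side: previous vertex list plus a patch gives the new list."""
    updated = list(vertices)
    for key, xyz in patch.items():
        updated[int(key)] = tuple(xyz)
    return updated


if __name__ == "__main__":
    old = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
    new = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5), (1.0, 1.0, 0.0)]
    payload = json.dumps(diff_vertices(old, new))  # what would be pushed
    print(payload)
    print(apply_patch(old, json.loads(payload)) == new)
```

When the vertex count changes, an index-based patch no longer makes sense, so the sketch raises an error; sending the full mesh is the simpler option in that case.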

How Open Source Enables Innovation

In September, I spoke at the AEC Tech Symposium. Together with Mostapha Roudsari, I discussed how some of the most recent web-based development projects at CORE studio, including VRX, are enabled by open-source initiatives, and how these platforms are being used for better collaboration inside and outside Thornton Tomasetti.

VRX: Virtual Reality for Building Information Models

This past year at Thornton Tomasetti’s CORE studio, I have been designing and developing VRX, a project for viewing Building Information Models in Virtual Reality. This web application allows the model creator to guide others through a shared virtual environment using Google Cardboard. I just published a blog post about it, which you can read here.

VR workshop at ACADIA!

Next week, I’ll be teaching a workshop called Prototyping Experiential Futures at the Association for Computer Aided Design in Architecture (ACADIA). The 3-day session will be co-taught with Shane Burger, Director of Technical Innovation at Woods Bagot. See the description below and sign up!

The field of Interaction Design (IxD) has come to dominate our experience of the digital world. As our physical world increasingly takes on digital threads in our everyday experiences, architects need to actively engage IxD for the built environment. We need to move from prototyping form to prototyping experience.

Applications of parametric design tools in Horizon House

Tomorrow we will present Horizon House at the Graduate School of Design as part of the ‘Innovate Talks’ program, introduced by Iñaki Abalos, Chair of the Department of Architecture, and moderated by Professor Mark Mulligan. We will explain how the competition called for a sustainable solution for a retreat in nature in rural Hokkaido, Japan, and how we envisioned a house built with re-used local materials.

In March 2013, I created a simple Grasshopper model that allowed for geometric exploration and also provided a general overview of material needs. You can check out the definition and some of the iterations below, extracted from a report I submitted at the end of the Spring semester. I have also attached some of our original construction drawings.
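As a rough illustration of how a model like this can turn geometry into a material estimate, the sketch below groups member lengths into stock pieces with first-fit decreasing, a standard bin-packing heuristic. It is hypothetical: the stock length, the member lengths (integer millimetres), and the choice of heuristic are assumptions, not taken from the Horizon House definition.

```python
from typing import List, Sequence


def first_fit_decreasing(lengths: Sequence[int], stock_length: int) -> List[List[int]]:
    """Group member lengths (mm) into stock pieces using first-fit decreasing.

    Each inner list is one stock piece and the members cut from it.
    Saw kerf is ignored for simplicity.
    """
    bins: List[List[int]] = []
    remaining: List[int] = []  # leftover length in each stock piece
    for length in sorted(lengths, reverse=True):
        if length > stock_length:
            raise ValueError(f"member of {length} mm exceeds stock of {stock_length} mm")
        for i, space in enumerate(remaining):
            if length <= space:
                # Cut the member from the first piece that still fits it.
                bins[i].append(length)
                remaining[i] -= length
                break
        else:
            # No existing piece fits: start a new stock piece.
            bins.append([length])
            remaining.append(stock_length - length)
    return bins


# Hypothetical member lengths and a 3.6 m stock length.
pieces = first_fit_decreasing([2400, 1200, 1800, 600, 3000], stock_length=3600)
print(len(pieces), pieces)
```

First-fit decreasing does not always find the minimum number of pieces, but it is quick and usually close enough for an early overview of material needs.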

Want to know more about Horizon House? Go here and here.

MDesS Thesis mid-term review

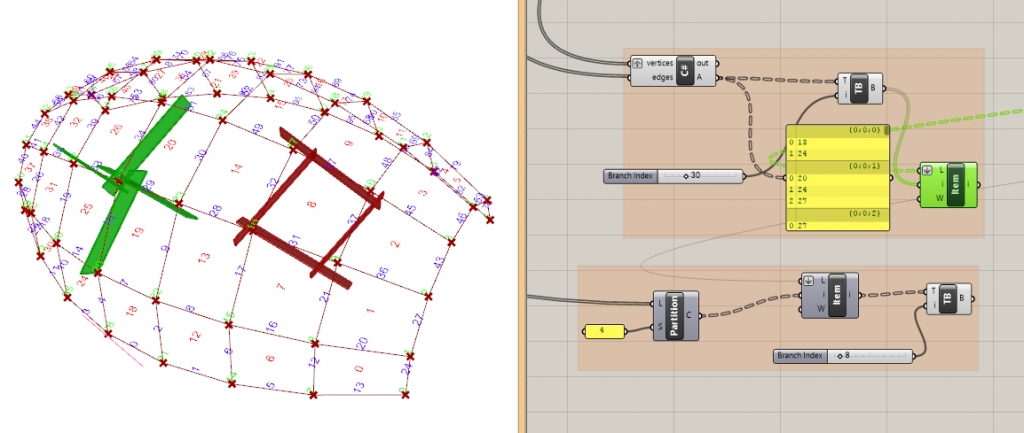

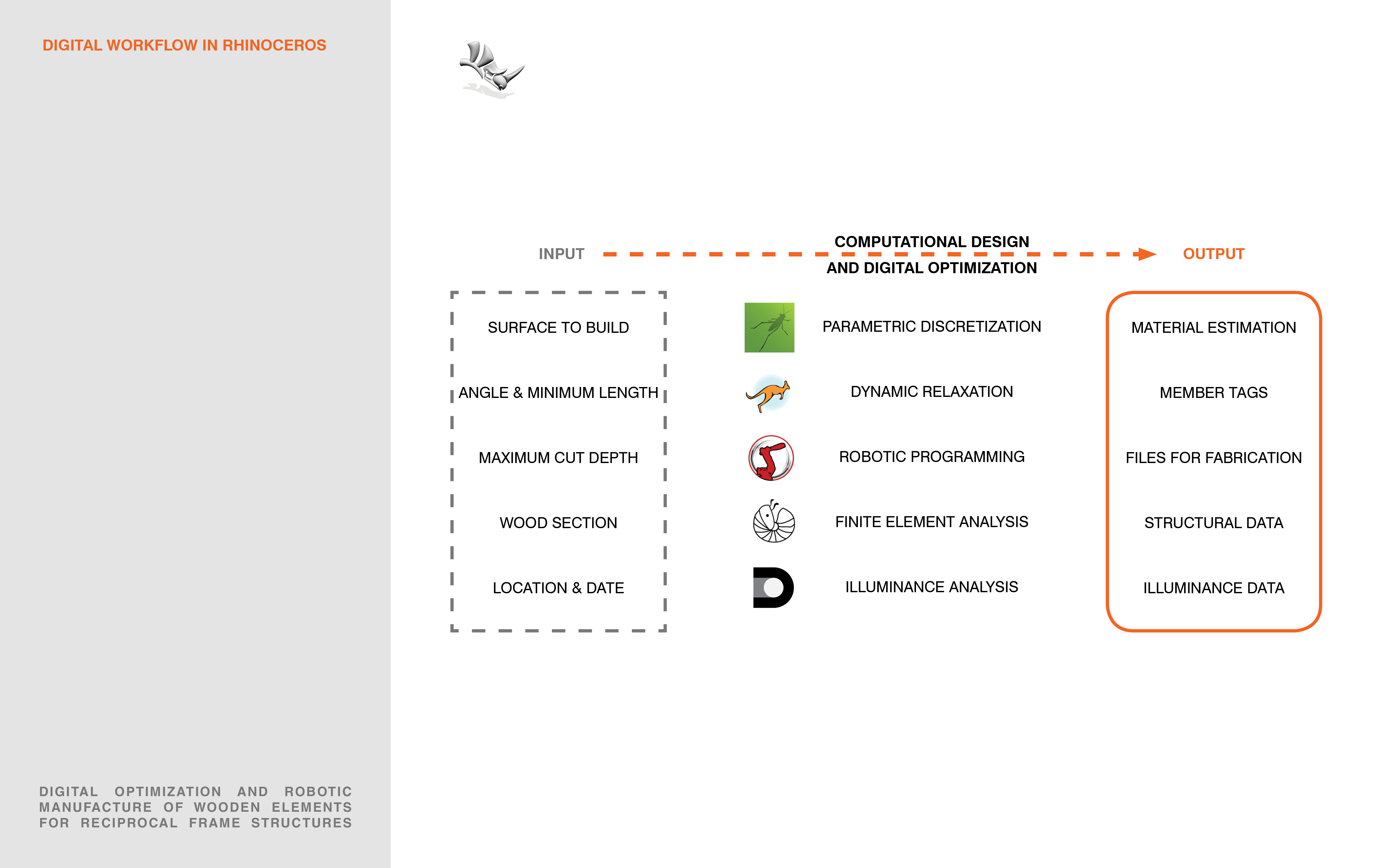

These images will be part of my thesis mid-term presentation on the digital design and robotic manufacture of wooden reciprocal frame structures, which will take place next week. The digital workflow described here is still under development. I have run some tests with the robot, and it seems to be heading in the right direction. I will post pictures of the prototypes in the next few days.

Among other features of this workflow, the designer can access each larger ‘quad’ using a path number that matches the index of the original face it belonged to, and can also retrieve the smaller configurations, which follow the numbering of the vertices. More features coming soon!
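Grasshopper organizes data in trees whose branches are addressed by paths such as `{0}` or `{0;2}`. The sketch below imitates that addressing in plain Python under hypothetical assumptions: each quad is stored under the index of the face it came from, `(f,)`, and each smaller configuration under `(f, v)`, where `v` is the vertex index within that face. The contents of the smaller configurations (a corner plus the midpoints of its two adjacent edges) are placeholders, not the thesis geometry.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Path = Tuple[int, ...]


def midpoint(a: Point, b: Point) -> Point:
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2)


def build_tree(faces: Sequence[Sequence[int]],
               vertices: Sequence[Point]) -> Dict[Path, List[Point]]:
    """Store each face's quad under (f,) and each per-vertex piece under (f, v)."""
    tree: Dict[Path, List[Point]] = {}
    for f, face in enumerate(faces):
        corners = [vertices[i] for i in face]
        tree[(f,)] = corners  # larger 'quad': same index as the source face
        n = len(corners)
        for v in range(n):
            before = corners[v - 1]  # index -1 wraps to the last corner
            after = corners[(v + 1) % n]
            # Hypothetical smaller configuration: corner plus adjacent edge midpoints.
            tree[(f, v)] = [midpoint(before, corners[v]), corners[v],
                            midpoint(corners[v], after)]
    return tree


def path_str(path: Path) -> str:
    """Format a path the way Grasshopper displays it, e.g. (0, 2) -> '{0;2}'."""
    return "{" + ";".join(str(i) for i in path) + "}"
```

For a single square face, the tree holds one quad at `{0}` and four smaller configurations at `{0;0}` through `{0;3}`, so either level can be retrieved directly by its path.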